We explore the idea of timed, one-on-one help sessions and find three minutes is usually enough time for novices to receive help from an expert software user.

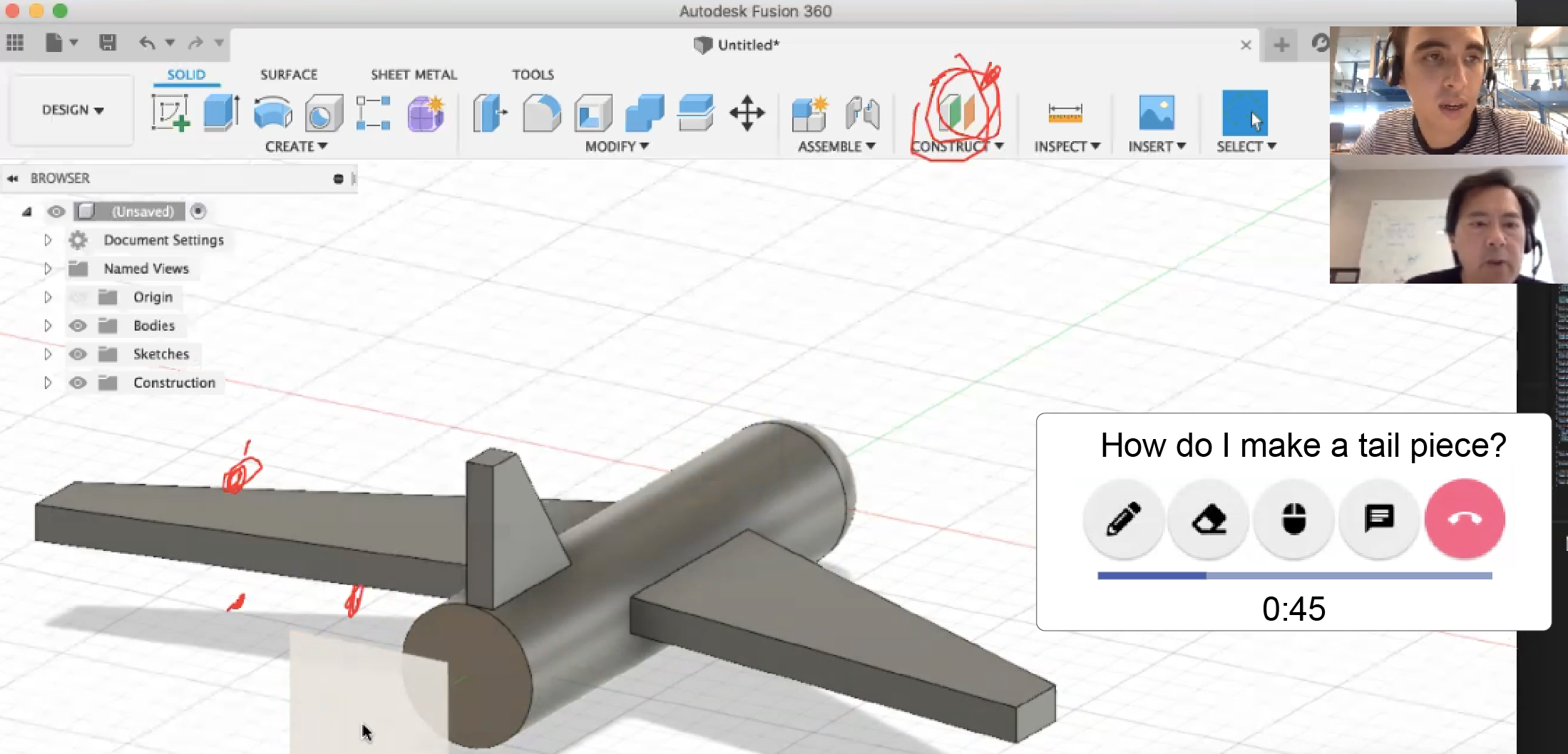

To facilitate this type of interaction, we develop MicroMentor, an on-demand help system that connects users via video conference for short, 3-minute help sessions.

Quick Facts

- We find that 3 minutes is often enough time for novice users to explain their problem and receive meaningful help from an expert.

- We created MicroMentor to facilitate short, one-on-one help sessions between software users. Users can request help by submitting a short screen and audio recording.

- MicroMentor automatically captures and attaches contextual information, such as the user’s recent command history and expertise, to the request.

- MicroMentor automatically ‘ranks’ potential helpers and notifies them that a request has opened. When a helper accepts the request, the asker and helper are automatically placed in a video call, where they can interact for only 3 minutes.

- All help sessions are automatically recorded and transcribed, so others can view them.

- Participants were overall satisfied with these short help sessions, giving 5-star ratings for over 2/3 of all requests.

Why would we ever want to do this?

Feature-rich software (like Photoshop, Excel, or Fusion 360) can be difficult to learn, and “over-the-shoulder” learning (i.e., informal help between colleagues in a workplace) is one of the most common and preferred help-seeking strategies.

Over-the-shoulder learning rarely occurs outside formal working environments. Some paid systems (like Codementor) try to mimic it by connecting developers over video conference, where they collaborate using a shared code editor. However, even when one-on-one help is readily available, people are reluctant to ask for it, due to embarrassment or a fear of disturbing others.

How can we make help-seeking less daunting?

Microblogging fulfills a need for an even faster mode of communication. By encouraging shorter posts, it reduces the time and thought users must invest to create content.

We have become accustomed to consuming small ‘tidbits’ of information (raise your hand if you found this post through Twitter ✋). In fact, the restrictive nature of services like Twitter (280-character limit) can encourage more use: there is less of a barrier to creating content, and it incentivizes getting to the point.

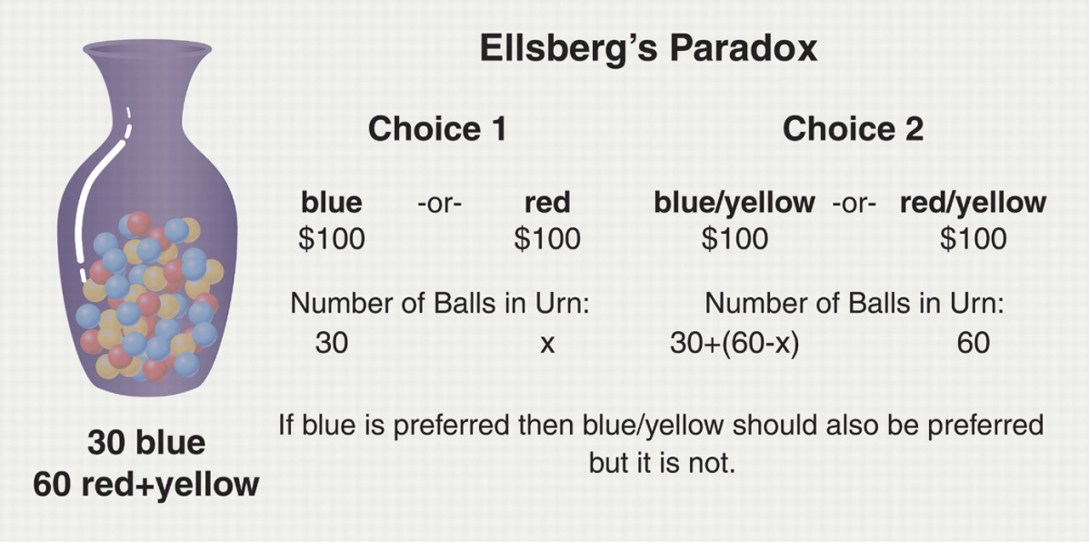

As people, we also dislike ambiguity and risk. The Ellsberg paradox, for example, shows that we prefer to bet on specific, known odds (even if they’re low) over ambiguous probabilities. In other words, we “prefer the devil we know” to unknown risks.

The more we know about something, including precisely how much time it will consume, the greater the chance we will commit to it.

Simply knowing more information (i.e., reducing uncertainty) can influence our behaviour, and this holds for time as well: to-do lists with time estimates can reduce procrastination, and written articles with estimated read times can increase engagement.

So, “restrictions” and uncertainty can influence our behaviour. How could we leverage this to support one-on-one software help sessions? Could a time limit encourage more help-seeking?

Are timed help sessions effective?

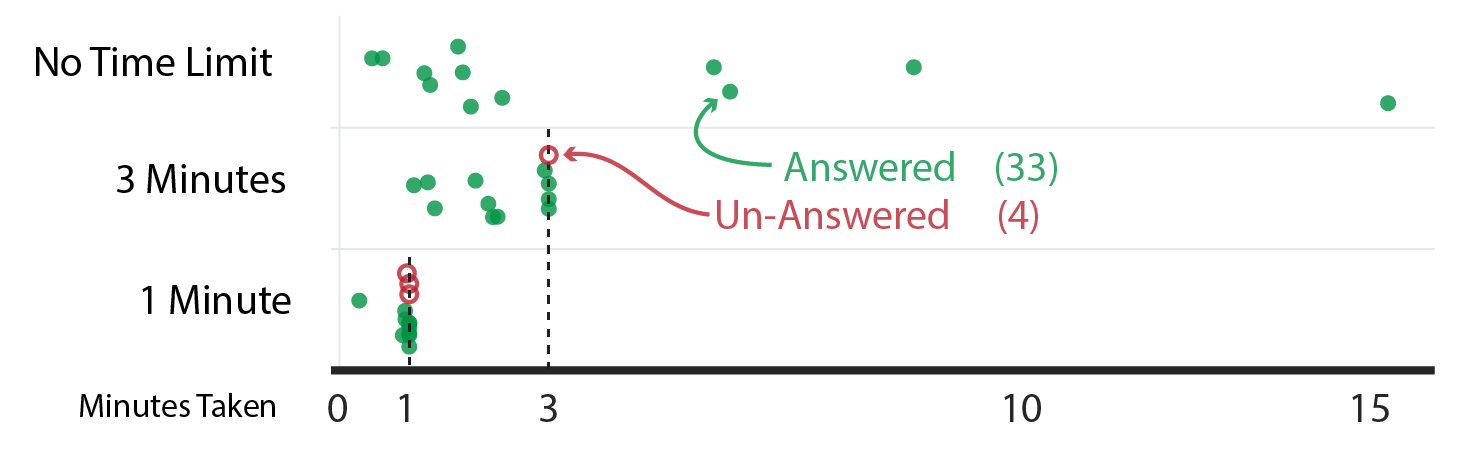

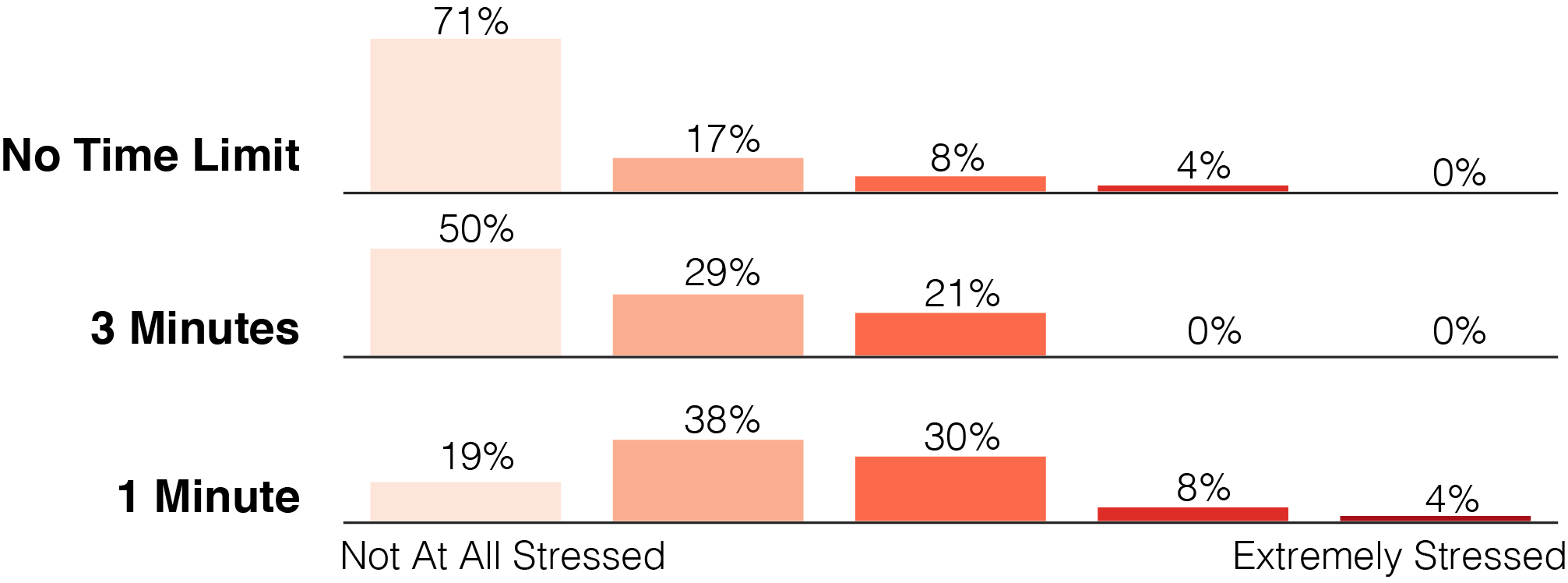

To better understand the effectiveness, opportunities, and challenges of rapid one-on-one software help sessions, we conducted a formative study with 6 participants using Fusion 360. Three time limits (1 minute, 3 minutes, no time limit) were tested.

Three participants were expert users, and three were novices. The novices were given an open-ended design task (design a car), and their only ‘resource’ was asking an expert for help. If help was requested, a random expert helper and time limit were assigned.

We conducted this study in-person to understand the “best-case scenario.” Participants completed short surveys after every help request and follow-up interviews after the experiment.

Results

Overall, 37 questions were asked and many were successfully answered in 3 minutes or less.

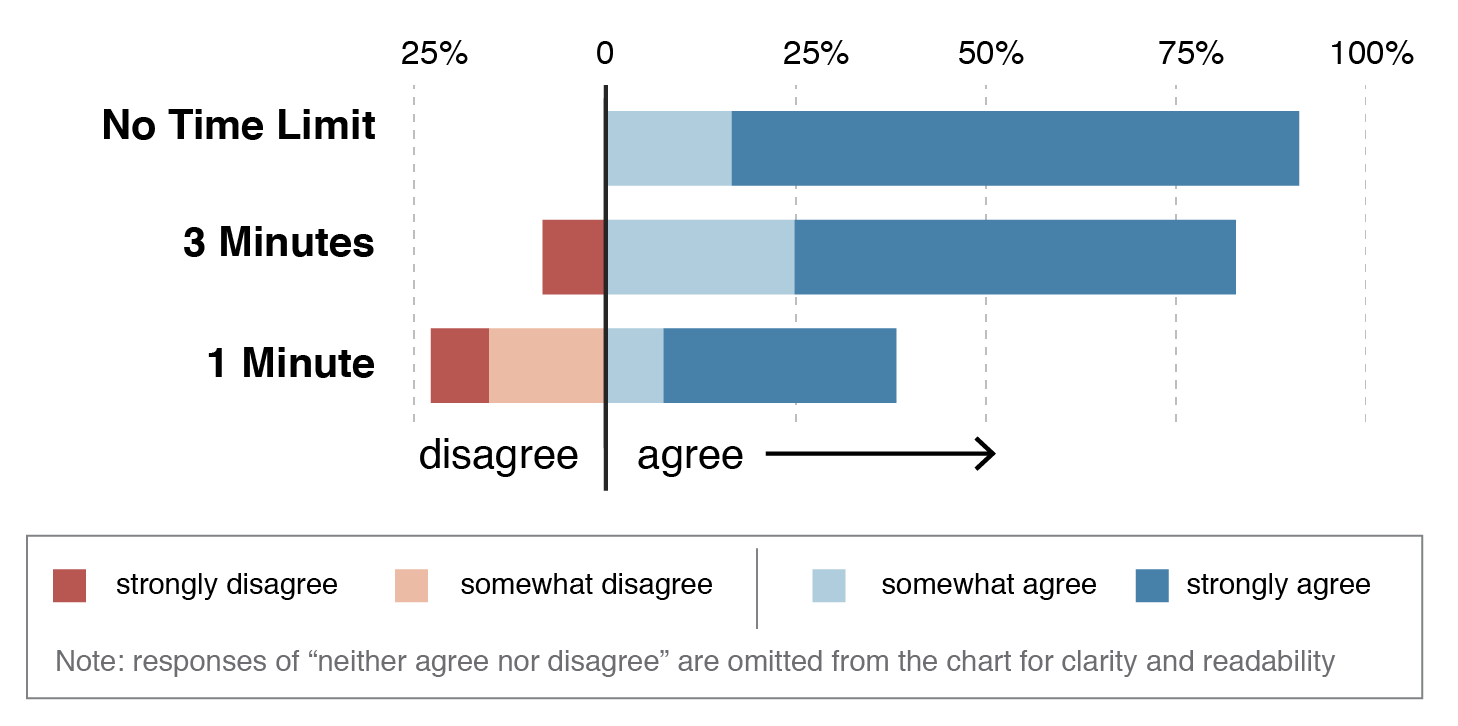

Having only 1 minute to answer a question was stressful, while 3 minutes appeared to be less so.

Finally, askers felt questions were answered 92% of the time with no time limit, and 83% with 3 minutes. With 1 minute, askers felt their questions were answered only 39% of the time.

Interviews

Overall, participants felt 3 minutes was optimal: there was enough time to explain a problem, clarify, and receive a focused response.

- With limited time, there was more pressure to ask short, focused questions with little elaboration. However, helpers preferred these targeted questions.

- With 1 minute, helpers only had enough time to show the quickest way of solving a problem (a “band-aid solution”), but 3 minutes may allow for more complete solutions.

- Many askers felt having no time limit was “unnecessary,” or felt guilty taking up the helper’s time.

- Helpers may get the same questions over and over again. One asker felt pairing with a helper capable of responding was more important than the duration itself. How could a quick help system address these issues?

To summarize: 1 minute may be too short to receive quality help, but 3 minutes is not that much different from no time limit.

MicroMentor

MicroMentor automatically captures and attaches contextual information to each request. It encourages the best helpers to accept a request first and automatically starts and ends help sessions. All sessions are recorded to help a broader audience.

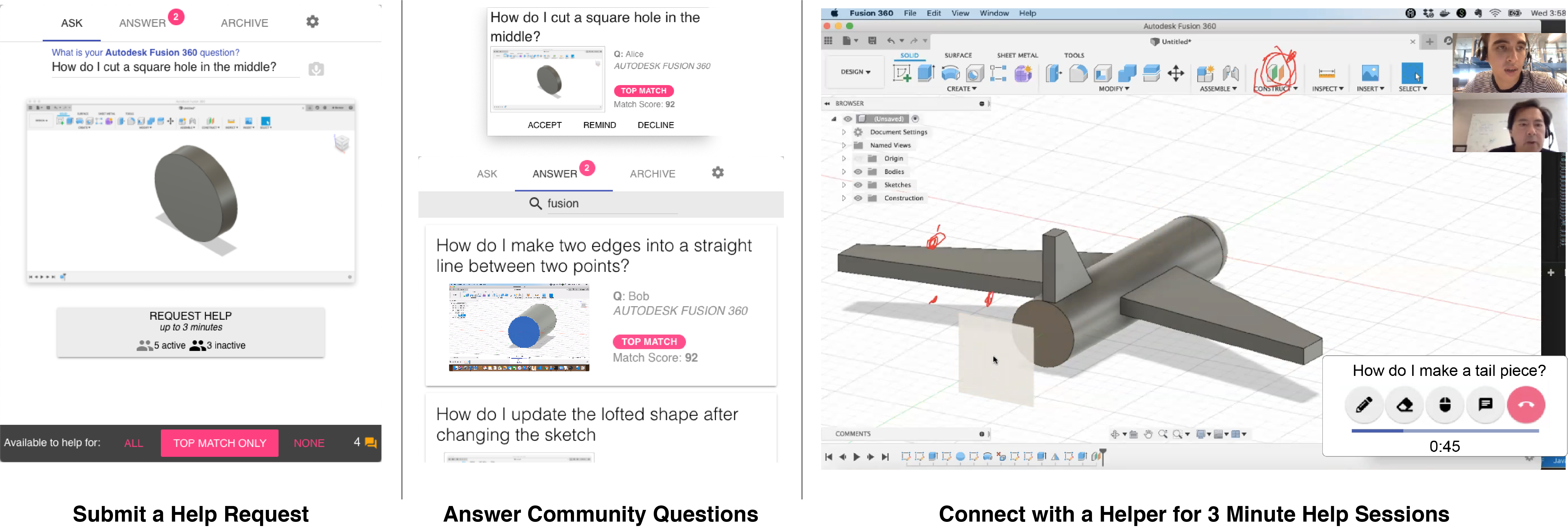

Submitting a Request

Users can capture a short 16s recording of their screen and audio, allowing them to explain problems in plain language within the context of the target application.

MicroMentor automatically transcribes the captured audio, so users do not have to type out their question. Users who do not want to record their screen or voice can type their question instead, and MicroMentor automatically attaches a screenshot in place of a video.

The screenshot or screen recording is automatically submitted with the help request, along with the user’s recent command history. Clicking the “Request Help” button sends the request to all available helpers (who are displayed on the “Request Help” button itself).
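To make the contents of a request concrete, here is a minimal Python sketch of how a client might assemble one. Every name in it (`HelpRequest`, `build_request`, `HISTORY_LIMIT`) and the 10-command history window are hypothetical, not MicroMentor’s actual code; transcription is assumed to happen elsewhere, so the transcript is passed in as plain text. Only the 16-second cap and the screenshot fallback come from the description above.

```python
from dataclasses import dataclass
from typing import List, Optional

MAX_RECORDING_SECONDS = 16  # recording length cap described in the post
HISTORY_LIMIT = 10          # hypothetical: how many recent commands to attach


@dataclass
class HelpRequest:
    """Hypothetical request payload; field names are illustrative only."""
    application: str
    question_text: str                     # transcript of the recording, or the typed question
    command_history: List[str]             # most recent commands, oldest first
    recording_path: Optional[str] = None   # screen + audio clip, if the user recorded one
    screenshot_path: Optional[str] = None  # attached instead of a video when the user types


def build_request(application: str, command_history: List[str], *,
                  recording_path: Optional[str] = None,
                  recording_seconds: float = 0.0,
                  transcript: Optional[str] = None,
                  typed_question: Optional[str] = None,
                  screenshot_path: Optional[str] = None) -> HelpRequest:
    recent = command_history[-HISTORY_LIMIT:]
    if recording_path is not None:
        # Recorded path: enforce the length cap and use the externally produced transcript.
        if recording_seconds > MAX_RECORDING_SECONDS:
            raise ValueError("recording exceeds the 16-second limit")
        if transcript is None:
            raise ValueError("a recorded request needs a transcript")
        return HelpRequest(application, transcript, recent, recording_path=recording_path)
    # Typed path: no video, so a screenshot is attached as context instead.
    if typed_question is None or screenshot_path is None:
        raise ValueError("a typed request needs both text and a screenshot")
    return HelpRequest(application, typed_question, recent, screenshot_path=screenshot_path)
```

Keeping the recorded and typed paths in one builder means both kinds of request reach helpers with the same contextual fields, which is what lets later steps (matching, archiving) treat them uniformly.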

Mentor Matching

MicroMentor ‘ranks’ potential helpers by assigning a “match score” (out of 100) to each person using the following factors:

- command history

- expertise

- time since the last help session

- previous interactions with a helper

- average rating

- additional skills

- relevance of additional skills
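The factors above are listed without a formula for combining them. One plausible way to get a score out of 100 is a weighted sum of factors that have each been normalized to [0, 1]; the Python sketch below assumes exactly that. The field names, the normalizations, and the weights are all hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass, fields


@dataclass
class MatchFactors:
    """One helper's factors for one request, each assumed pre-normalized to [0, 1]."""
    command_overlap: float    # similarity between helper's and asker's command history
    expertise: float          # helper's expertise in the target application
    idle_time: float          # higher when the helper has not helped recently
    prior_interaction: float  # e.g. how well past sessions with this asker went
    average_rating: float     # mean star rating divided by 5
    additional_skills: float  # breadth of extra skills the helper lists
    skill_relevance: float    # how relevant those skills are to this request


# Hypothetical weights; they sum to 1 so scores land in [0, 100].
WEIGHTS = {
    "command_overlap": 0.25,
    "expertise": 0.20,
    "idle_time": 0.10,
    "prior_interaction": 0.10,
    "average_rating": 0.15,
    "additional_skills": 0.05,
    "skill_relevance": 0.15,
}


def match_score(f: MatchFactors) -> float:
    """Weighted sum of the normalized factors, scaled to a 0-100 match score."""
    total = 0.0
    for fld in fields(f):
        value = getattr(f, fld.name)
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{fld.name} must be in [0, 1], got {value}")
        total += WEIGHTS[fld.name] * value
    return round(100 * total, 1)
```

A linear score like this is easy to tune and explain to helpers, at the cost of ignoring interactions between factors (for example, high expertise mattering more when skills are relevant).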

MicroMentor uses a triaged approach, so that those with high match scores are notified first.
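A triaged rollout can be sketched by sorting helpers by match score and notifying them in small waves, stopping once someone accepts. The wave size, the tie-breaking rule, and the `notify`/`is_accepted` callbacks are hypothetical; a real system would also wait between waves instead of sending them back to back.

```python
from typing import Callable, Dict, List


def notification_waves(scores: Dict[str, float], wave_size: int = 2) -> List[List[str]]:
    """Group helpers into waves, highest match score first (ties broken by name)."""
    if wave_size < 1:
        raise ValueError("wave_size must be at least 1")
    ranked = sorted(scores, key=lambda helper: (-scores[helper], helper))
    return [ranked[i:i + wave_size] for i in range(0, len(ranked), wave_size)]


def dispatch(waves: List[List[str]],
             notify: Callable[[List[str]], None],
             is_accepted: Callable[[], bool]) -> List[str]:
    """Notify one wave at a time until the request is accepted.

    A real system would pause between waves to give each group time to respond;
    that delay is omitted here to keep the sketch synchronous.
    """
    notified: List[str] = []
    for wave in waves:
        if is_accepted():
            break
        notify(wave)
        notified.extend(wave)
    return notified
```

For example, with scores `{"ana": 92, "ben": 75, "chen": 88, "dee": 40, "eli": 75}` and a wave size of 2, the waves are `[["ana", "chen"], ["ben", "eli"], ["dee"]]`, so lower-scoring helpers are only disturbed if the best matches do not respond.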

Accepting a Request

Help requests can be accepted through “push notifications” or by browsing through open requests in the “Answer” tab. Potential helpers can quickly view the request information, including the topic, recorded video, target application, and match score.

If the helper accepts the request, they are automatically added to a video conference with the asker. If they click “Remind,” MicroMentor will display the notification again after 10s if no one else has accepted the request.

Users can adjust their notification preferences, so they are only notified when they have the highest match score.
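The “Remind” behaviour and the highest-match-only preference could look roughly like this using Python’s `asyncio`. The 10-second delay comes from the text; the function names and callbacks are hypothetical.

```python
import asyncio
from typing import Callable, Dict

REMIND_DELAY_S = 10.0  # from the post: the notification reappears after 10 seconds


async def remind_later(request_id: str,
                       is_accepted: Callable[[str], bool],
                       show_notification: Callable[[str], None],
                       delay: float = REMIND_DELAY_S) -> bool:
    """Re-show a notification after `delay` seconds unless someone has accepted it.

    Returns True if the notification was shown again.
    """
    await asyncio.sleep(delay)
    if is_accepted(request_id):
        return False
    show_notification(request_id)
    return True


def should_notify(helper: str, scores: Dict[str, float], only_when_top: bool) -> bool:
    """Respect the 'only notify me when I have the highest match score' preference."""
    if not only_when_top:
        return True
    return scores[helper] == max(scores.values())  # ties: every top scorer is notified
```

Checking acceptance only after the delay, rather than cancelling the reminder, keeps the sketch simple; a production system might cancel the pending task as soon as the request is taken.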

Help Sessions

Once a request has been accepted, the asker is given a 5s “warning” that a meeting is about to start. A video conference automatically launches with screen sharing, video, and annotation capabilities.

The asker and helper can interact with one another for 3 minutes. A timer shrinks as time passes, and becomes red when there are 30s left. With 10s left, it starts ‘flashing.’

After 3 minutes pass, the call automatically ends. The asker can give the session a ‘rating’ out of 5 stars, based on their satisfaction. If they were unsatisfied (a rating of 3 or less), they can request a new helper.
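The session timing rules reduce to a small state function. This Python sketch assumes the timer starts when the call launches (after the 5-second warning), treats the 30- and 10-second thresholds as inclusive, and assumes ratings are whole stars from 1 to 5; all names are hypothetical.

```python
SESSION_SECONDS = 180    # 3-minute sessions
RED_AT_REMAINING = 30    # timer turns red with 30 s left
FLASH_AT_REMAINING = 10  # timer starts flashing with 10 s left


def timer_state(elapsed: float) -> str:
    """Visual state of the session timer, given seconds since the call started."""
    remaining = SESSION_SECONDS - elapsed
    if remaining <= 0:
        return "ended"  # the call is closed automatically
    if remaining <= FLASH_AT_REMAINING:
        return "red-flashing"
    if remaining <= RED_AT_REMAINING:
        return "red"
    return "normal"


def timer_fraction(elapsed: float) -> float:
    """Fraction of the timer bar still visible (it shrinks from 1.0 to 0.0)."""
    return max(0.0, min(1.0, (SESSION_SECONDS - elapsed) / SESSION_SECONDS))


def may_request_new_helper(rating: int) -> bool:
    """After the call, a rating of 3 stars or fewer lets the asker request someone else."""
    if rating not in range(1, 6):
        raise ValueError("rating must be 1-5 stars")
    return rating <= 3
```

Deriving every visual cue from a single `elapsed` value keeps the asker’s and helper’s timers consistent as long as both clients agree on when the call started.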

Archives

All help sessions are automatically recorded, building a bank of knowledge that can help a broader audience.

Users can browse and search for relevant archives, using all contextual information (including the application name, commands, and transcription).

Clicking an archive opens a detailed view, showing the recorded video and a transcription of spoken audio and commands. Clicking a specific line of the transcription moves the video to that timestamp. Archives can be quickly shared by email.
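Archive search and transcript seeking could be sketched as follows: an archive bundles the application name, command list, and time-stamped transcript lines; a query matches when every term appears somewhere in those fields; and clicking a transcript line maps to that line’s start time. The plain substring matching and all names here are illustrative assumptions, not MicroMentor’s actual search implementation.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TranscriptLine:
    start_s: float  # position in the recorded video, in seconds
    text: str       # spoken words or a logged command


@dataclass
class Archive:
    application: str
    commands: List[str]
    transcript: List[TranscriptLine]


def _searchable_text(archive: Archive) -> str:
    """Concatenate all contextual fields into one lowercase string."""
    parts = [archive.application, *archive.commands, *(line.text for line in archive.transcript)]
    return " ".join(parts).lower()


def search_archives(archives: List[Archive], query: str) -> List[Archive]:
    """Return archives whose contextual fields contain every query term (case-insensitive)."""
    terms = query.lower().split()
    return [a for a in archives if all(term in _searchable_text(a) for term in terms)]


def seek_time(archive: Archive, line_index: int) -> float:
    """Clicking transcript line `line_index` jumps the video to that line's start time."""
    return archive.transcript[line_index].start_s
```

Because commands and spoken words are searched together, a query like `fillet` can find a session even if nobody said the tool’s name aloud, as long as the command was logged.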

Evaluating MicroMentor

Recall that during our first experiment, participants could not access other help resources. Because this does not represent a realistic scenario, we evaluate MicroMentor by comparing it to a baseline condition (online resources).

Rather than quantitative comparisons, we are more interested in eliciting qualitative feedback from participants to further validate the concept of quick help. The baseline is mainly included to provide participants a point of reference when discussing MicroMentor.

We recruited 12 participants (8 novices, 4 experts). Like the first experiment, the novices worked on an open-ended design task (design a boat or plane) in Fusion 360. Half of the time, they could only use online resources, and the other half, they could only seek out help using MicroMentor. The experts answered questions that came in through MicroMentor.

Results

Overall, participants took part in 40 help sessions using MicroMentor. During the baseline condition, askers made 65 help-seeking attempts, but they often returned to the same resources multiple times, so it is unclear whether these attempts were useful.

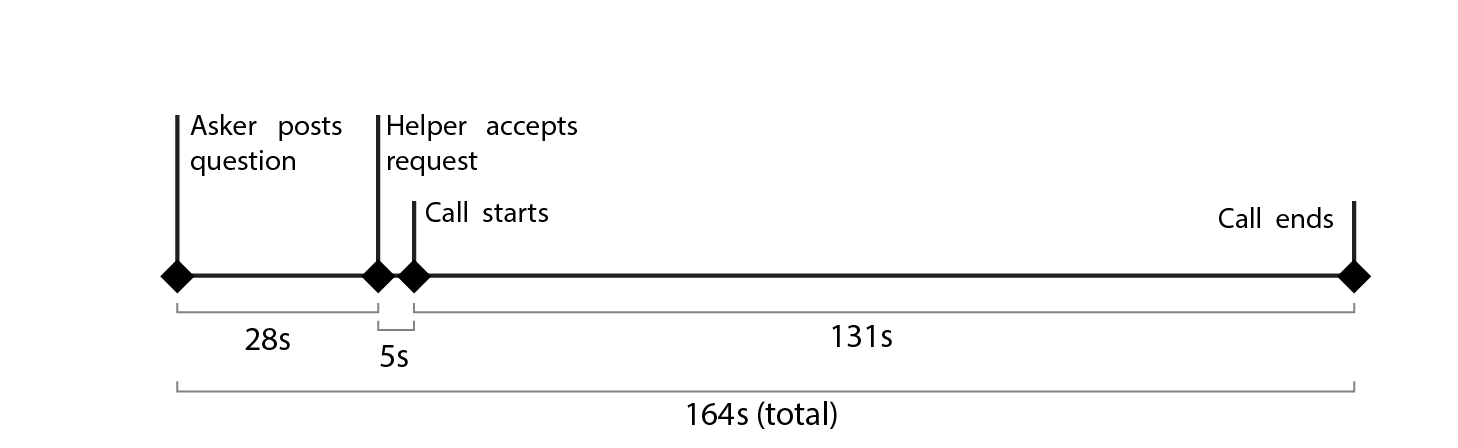

It took between 7s and 91s (28s on average) for a request to be accepted by a helper. The asker joined the call 5s later. The shortest help session was 33s and the average was 131s. The average request was 164s long.
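These averages appear to add up. Assuming the 164s figure measures a request from submission to the end of its help session, it decomposes into the average wait for a helper, the fixed 5s join delay, and the average session length; because the mean of a sum equals the sum of the means, the per-step averages can be added directly:

```latex
\bar{t}_{\text{request}}
  = \bar{t}_{\text{accept}} + t_{\text{join}} + \bar{t}_{\text{session}}
  = 28\,\text{s} + 5\,\text{s} + 131\,\text{s}
  = 164\,\text{s}
```

Under that reading, a typical request was resolved in under 3 minutes end to end, including the time spent waiting for a helper.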

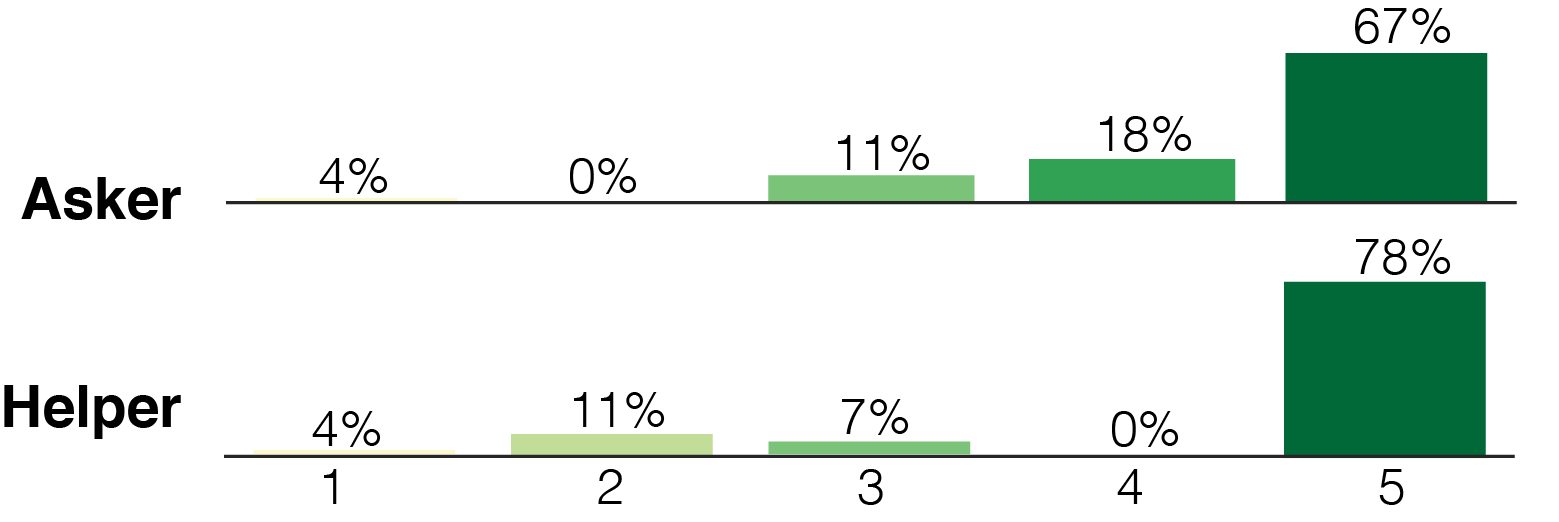

After every help session, askers and helpers gave a rating (out of 5 stars), based on their satisfaction with the response. Overall, participants were satisfied with these help requests, giving a 4- or 5-star rating 85% of the time. Participants gave 5-star ratings for over 2/3 of all requests.

Participants also thought MicroMentor was easy to use, and many mentioned they would use it frequently in their day-to-day work:

Seeking help independently usually results in general tutorials that may not cover the specifics of what you want to do and there is a lot of sifting through irrelevant information. Also finding the solution to your exact case is a matter of luck because it depends on someone else already having that issue and posting about it online. With the MicroMentor system, you can find an answer to the specific problem you’re having and get related tips or best practices to gain even more knowledge.

What’s next? What can we learn?

Overall, we contribute the idea of time-bounded, one-on-one help sessions and find 3 minutes is usually enough time for novice users to receive help from experts. We create MicroMentor, an on-demand system that reduces the transaction costs of finding and initiating quick help sessions.

We hope our work will serve as a foundation for future quick-help systems, and we believe there are many exciting avenues for future work:

- How else can we match askers and helpers? Our mentor matching algorithm could be improved, and there are many ways to route questions to helpers. For example, questions could be ‘triaged’ based on the topic. Alternatively, questions could be first shown to someone’s “buddy” to help foster long-term relationships.

- How can we better support novice helpers? We assumed experts would act as helpers but novices could also provide help. What additional tools could we provide to encourage novices to help their peers?

- How can we encourage broader participation? What ‘gamifications’ or additional incentives could MicroMentor provide to encourage more users to answer questions? Could companies provide additional incentives (like cloud credits)?

- How can companies use quick-help systems? How would a quick-help system compare to traditional call centres? How could the data gathered by MicroMentor be used to improve software applications? For example, if there are many questions about a particular tool in Photoshop, how could the company address this confusion in future software updates?

We hope more work will focus on turning short, micro-moments into big learning opportunities. Please read the paper for more details and feel free to contact us if you have any additional questions!

Publication

Nikhita Joshi, Justin Matejka, Fraser Anderson, Tovi Grossman, and George Fitzmaurice. 2020. MicroMentor: Peer-to-Peer Software Help Sessions in Three Minutes or Less. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (CHI 2020). DOI: https://doi.org/10.1145/3313831.3376230

BibTeX

@inproceedings{Joshi2020MicroMentor,
  author = {Joshi, Nikhita and Matejka, Justin and Anderson, Fraser and Grossman, Tovi and Fitzmaurice, George},
  title = {MicroMentor: Peer-to-Peer Software Help Sessions in Three Minutes or Less},
  year = {2020},
  isbn = {9781450367080},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3313831.3376230},
  doi = {10.1145/3313831.3376230},
  booktitle = {Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems},
  pages = {1--13},
  numpages = {13},
  keywords = {mentoring, software learning, quick help, one-on-one help},
  location = {Honolulu, HI, USA},
  series = {CHI '20}
}


Acknowledgements

We thank the User Interface Research group at Autodesk Research (Toronto) for their feedback and guidance. A big thanks to Rebecca Krosnick and Kimia Kiani for their help facilitating our two user studies.

Contact Us

Director of HCI & Visualization Research

Autodesk Research

george.fitzmaurice [at] autodesk.com

Nikhita Joshi, Justin Matejka, Fraser Anderson, Tovi Grossman, and George Fitzmaurice.

Autodesk Research, University of Waterloo, University of Toronto © 2020

Blog post template by Johann Wentzel.